CDC Cyber 72

Cyfronet's first computer was launched on June 27, 1975. It was one of two CDC CYBER 72 computers purchased by Poland in 1973: the first was installed at the Institute for Nuclear Research in Świerk near Warsaw, and the second at Cyfronet (then known as the CYFRONET-Kraków Academic Computing Center). The two installations were two years apart, mainly because of embargo-related issues affecting socialist countries, including Poland. Universities and research institutes in both cities were equipped with terminals consisting of a punched card reader and a line printer, which made it possible to run programs remotely and receive the results in printed form.

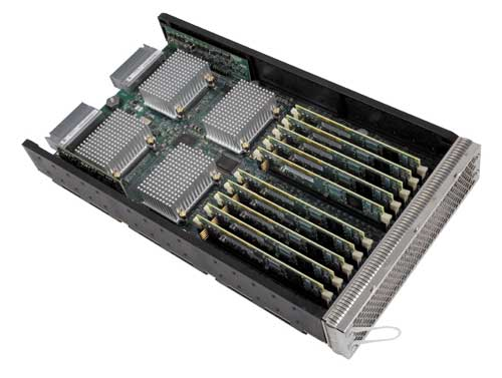

The supercomputer installed at Cyfronet, manufactured by the American company Control Data Corporation (CDC), was based on the 6400 series architecture. Its computing speed, remarkably high for the time, was achieved through the system’s multiprocessor structure and multi-threaded computing technique. The central unit of the CYFRONET-Kraków computing system had one central processing unit (CPU), which was controlled by ten peripheral processing units (PPUs). The PPUs handled all data transfers between the main memory and peripheral devices. They also performed basic arithmetic and logical operations and prepared and assigned tasks to the central processor, so that it could be used as efficiently as possible. The average processing speed of the central unit was one million operations per second.

Configuration of the Cyber 72 system at the CYFRONET-Kraków Academic Computing Center (ŚCO CYFRONET-Kraków):

- 1 central processor with 98,324 words of main memory (60-bit word, 1 μs memory cycle),

- 10 peripheral processors with 4,096 words of memory (12-bit word),

- 12 input/output (I/O) channels,

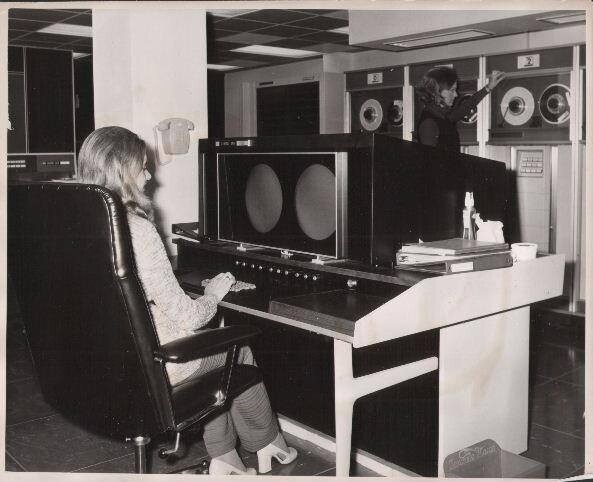

- Dual-screen operator console equipped with a keyboard,

- Card reader capable of reading 1,200 cards per minute,

- Line printer printing 1,200 lines per minute (136 characters per line),

- Card punch (250 cards per minute),

- The computer was initially equipped with three type 841 disks, each with a capacity of 2×40 MB; in 1979, they were replaced with three type 844 disks, each with a capacity of 100 MB.
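
For a sense of scale, the figures in the configuration list can be converted into present-day units. The following is a minimal sketch rather than anything taken from the original documentation. It assumes that the 4,096-word memory is per peripheral processor and that the quoted disk capacities are per drive; all constant and function names are illustrative.

```python
# Figures copied from the configuration list; all names are illustrative only.
CPU_WORDS, CPU_WORD_BITS = 98_324, 60
PP_COUNT, PP_WORDS, PP_WORD_BITS = 10, 4_096, 12  # assumption: 4,096 words per PP
PRINTER_LINES_PER_MIN, CHARS_PER_LINE = 1_200, 136
DISK_COUNT = 3
DISK_841_MB = 2 * 40  # "2×40 MB", assumed to be the capacity of one type 841 drive
DISK_844_MB = 100     # assumed to be the capacity of one type 844 drive


def bits_to_bytes(bits: int) -> float:
    """Express a bit count in 8-bit bytes, purely for comparison."""
    return bits / 8


def summary() -> dict:
    """Derive totals from the listed figures under the stated assumptions."""
    cpu_bits = CPU_WORDS * CPU_WORD_BITS
    pp_bits = PP_COUNT * PP_WORDS * PP_WORD_BITS
    return {
        "cpu_memory_bytes": bits_to_bytes(cpu_bits),
        "pp_memory_bytes": bits_to_bytes(pp_bits),
        "printer_chars_per_sec": PRINTER_LINES_PER_MIN * CHARS_PER_LINE / 60,
        "disk_mb_initial": DISK_COUNT * DISK_841_MB,
        "disk_mb_from_1979": DISK_COUNT * DISK_844_MB,
    }


if __name__ == "__main__":
    for name, value in summary().items():
        print(f"{name}: {value:,.0f}")
```

Under these assumptions, the main memory corresponds to roughly 720 KiB and the combined peripheral processor memory to 60 KiB, while total disk capacity rises from 240 MB to 300 MB with the 1979 replacement.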