Cyfronet has provided the computing resources of Poland's two fastest supercomputers, Helios and Athena, for the creation of Bielik, a Polish language model.

Bielik-11B-v2 – a new Polish large language model

Bielik was created by a team working within the SpeakLeash Foundation and ACC Cyfronet AGH. It is a Polish large language model (LLM) with 11 billion parameters.

SpeakLeash – a group of enthusiasts and creators of Bielik

SpeakLeash is a foundation that brings together people from very different professions. This group of enthusiasts set itself the goal of building the largest Polish text dataset, modeled on foreign initiatives such as The Pile. The project team consists primarily of employees of Polish companies, researchers from research centers, and students in fields related to artificial intelligence. The team worked on the Polish language model for over a year, and the initial scope of the work included data collection, processing, and classification, among other tasks.

"The most difficult task was obtaining data in Polish. We can work only with source data whose origin we are certain of," explains Sebastian Kondracki of SpeakLeash, the originator of Bielik.

The SpeakLeash Foundation's resources are currently the largest, best-described, and best-documented Polish-language dataset.

Helios and Athena – computing power for science

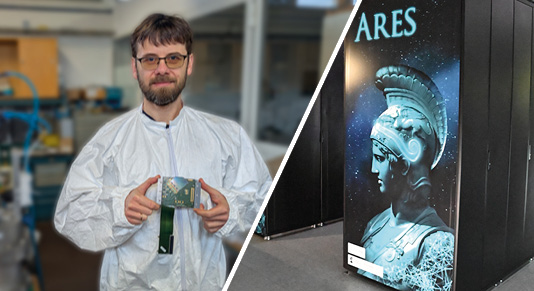

Supercomputers from the Academic Computer Center Cyfronet AGH allowed the Bielik project to spread its wings.

The cooperation between AGH staff and the SpeakLeash Foundation provided the computing power needed to create the model and supported the SpeakLeash team with expert and scientific knowledge, which was key to the success of the joint project.

The ACC Cyfronet team's support covered optimizing and scaling the training processes, building data processing pipelines, developing and running synthetic data generation methods, and developing model evaluation methods. One result of this work is a ranking of Polish models, the Polish OpenLLM Leaderboard. The experience and knowledge gained through this cooperation allowed the PLGrid expert team to prepare guidelines and optimized solutions for scientific users, including computing environments on the Athena and Helios clusters for working with language models.

"We used the resources of Helios, currently the fastest machine in Poland, to train the language models," says Marek Magryś, Deputy Director of ACC Cyfronet AGH for High-Performance Computing. "Our role is to support the cataloging, collection, and processing of data with expert knowledge, experience and, above all, computing power, and to carry out the training of the language models together. Thanks to the work of the SpeakLeash and AGH teams, we managed to create Bielik, an LLM that handles our language and cultural context very well and can be a key element of Polish text-processing pipelines in scientific and business applications. The quality of Bielik is confirmed by the high positions the model has achieved in rankings for the Polish language."

Together, Helios and Athena deliver over 44 PFLOPS for traditional computer simulations and as much as 2 EFLOPS for lower-precision artificial intelligence calculations.

"When we work with data as large as in the Bielik project, the infrastructure required obviously exceeds the capabilities of an ordinary computer. We need substantial computing power just to prepare the data and compare it, and then to train the models. Because supercomputers of this kind are so hard to access, few companies are able to carry out such work on their own. Fortunately, AGH has this kind of infrastructure," explains Prof. Kazimierz Wiatr, Director of ACC Cyfronet AGH.

At the same time, the supercomputers of ACC Cyfronet AGH are used by several thousand scientists from many fields. Advanced modeling and numerical calculations are used mainly in chemistry, biology, physics, medicine, and materials technology, as well as in astronomy, geology, and environmental protection. The Cyfronet supercomputers available through the PLGrid infrastructure also serve high energy physics (the ATLAS, LHCb, ALICE, and CMS projects), astrophysics (CTA, LOFAR), Earth sciences (EPOS), the European Spallation Source (ESS), gravitational wave research (LIGO/Virgo), and biology (WeNMR).

"We use the two fastest supercomputers in Poland, Athena and Helios, to train Bielik, but even so, our resources are much smaller than the infrastructure of the world leaders. In addition, several hundred other users are using the supercomputers at the same time," explains Marek Magryś. "Our systems, however, can complete in a few hours or days calculations that could take years, or in some cases even centuries, on ordinary computers."

Bielik and ChatGPT – basic differences

"The dataset feeding Bielik is constantly growing, but it will be difficult for us to compete with the resources used by models that work in English. In addition, there is much less content on the Internet in Polish than in English," explain the creators.

The most popular product built on a large language model is ChatGPT, developed by OpenAI. Even so, there are good reasons to develop language models for other languages as well.

Marek Magryś, Deputy Director of ACC Cyfronet AGH for High-Performance Computing, emphasizes:

"While ChatGPT can speak Polish, it is saturated with English-language content. As a result, it has a limited understanding of, for example, Polish culture or the nuances of Polish literature. It also does not fully grasp the logic of more complex texts, such as legal or medical ones. If we want a language model for these specialist areas, one that reasons well in Polish and responds in correct Polish, we cannot rely solely on foreign models."

The version that users can test is publicly available free of charge and is constantly being improved. In addition to the full versions of the models, the authors have released a range of quantized versions in the most popular formats, which make it possible to run the model on your own computer.
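To give a rough sense of why quantized versions make it possible to run an 11-billion-parameter model on a personal computer, the short Python sketch below estimates how much memory the model weights alone take at different precisions. It assumes exactly 11 billion parameters and decimal gigabytes, and it ignores activations, the KV cache, and quantization metadata; the precisions shown are illustrative and are not a list of the formats the authors actually published.

```python
# Rough, weights-only memory estimate for an LLM at different precisions.
# Assumptions (not from the article): exactly 11 billion parameters,
# decimal gigabytes (1 GB = 10**9 bytes), and no overhead for activations,
# the KV cache, or quantization metadata such as scaling factors.

PARAMS = 11_000_000_000  # rounded parameter count of Bielik-11B-v2


def weight_memory_gb(num_params: int, bits_per_param: float) -> float:
    """Return the approximate size of the model weights in decimal GB."""
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / 1e9


if __name__ == "__main__":
    # Illustrative precisions only; real quantized formats vary.
    for label, bits in [("16-bit", 16), ("8-bit quantized", 8), ("4-bit quantized", 4)]:
        print(f"{label:16s} ~{weight_memory_gb(PARAMS, bits):5.1f} GB")
```

Under these assumptions the weights shrink from about 22 GB at 16 bits to about 5.5 GB at 4 bits, which is why quantization can bring a model of this size within reach of well-equipped consumer hardware.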

"It is worth knowing that Bielik performs very well at tasks such as summarizing content. Our model is already useful in scientific and business settings; for example, it can be used to improve communication with users when handling helpdesk tickets," explains Szymon Mazurek from ACC Cyfronet AGH.

Why is it worth building Polish language models?

The creators of Bielik explain that artificial intelligence services available on the Internet, including the most popular ones such as ChatGPT, run on external servers. If a company or industry develops a solution that works with specialist data, such as medical data, or with texts that cannot leave the company for various reasons (for example, because they are confidential), the only option is to run such a model in-house. Such a model will not be as capable as ChatGPT, but it does not need to be as general either.

An additional benefit of developing language models like Bielik is strengthening Poland's position in AI innovation. Moreover, as the creators emphasize, it is worth building our own tools and thus becoming independent of external companies, which, in the event of market turmoil, new regulations, or legal restrictions, may for example cut off access to their resources. By developing and improving tools in Poland, we are building a stable foundation and can secure many of our sectors, such as banking, public administration, healthcare, and law.

"Intensive work on AI, whether on language models such as Bielik or on other AI-based tools, is in the interest of every well-functioning economy. We are seeing intensified work on such solutions in many countries," explains Jan Maria Kowalski from the SpeakLeash Foundation.

The final passage, explaining why it is worth developing language models in languages other than English, was generated by Bielik:

Developing language models in languages other than English can bring many benefits, both in linguistic research and in practical applications of technology. Here are some reasons why it is worth doing:

- Better understanding of linguistic diversity: Language models based on English may not fully take into account the specifics of other languages, leading to biases or inaccuracies in understanding and translating content in other languages. Developing models for other languages allows for a better understanding of their grammar, vocabulary, and cultural context.
- Improving translation quality: Language models play a key role in automatic text translation. By developing models for less popular languages, you can improve the quality of translations by eliminating errors and ambiguities resulting from a lack of understanding of the specifics of these languages.
- Support for language communities: Developing language models for minority languages can have a positive impact on these communities, facilitating communication and access to information in their native language.
- Opening the door to linguistic diversity research: Language models can help us study the similarities and differences between languages, which is crucial for understanding the evolution and divergence of languages around the world.
- Improving AI tools: The development of language models in different languages broadens the scope of AI applications, enabling more advanced systems that use natural languages more precisely and accurately.
- Preserving the world's linguistic diversity: The popularization of foreign languages often leads to the marginalization of local dialects and languages. Developing language models for lesser-known languages can help preserve linguistic diversity around the world.

Useful links:

Try Bielik: bielik.ai

Current state of the data collection: speakleash.org/dashboard